HR departments love the much-hyped ChatGPT for its productivity shortcuts, but are they too trusting of its responses?

This report is part one in a series on HR and chatbots. Part two covers how chatbots are disrupting HR service delivery.

ChatGPT—the conversational chatbot from OpenAI that has generated a ton of buzz since its launch in late 2022—is already proving its worth in the world of HR. In our survey of 300 HR employees in the U.S.*, 73% say they’ve used ChatGPT or a similar chatbot for their job.

The speed at which these chatbots can generate HR content (such as job descriptions and employee handbook material) with minimal effort has HR leaders salivating over the possibilities. The technology isn’t perfect, however. By OpenAI’s own admission, ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers,” meaning users can easily share false information with employees or job seekers if they aren’t paying attention.[1]

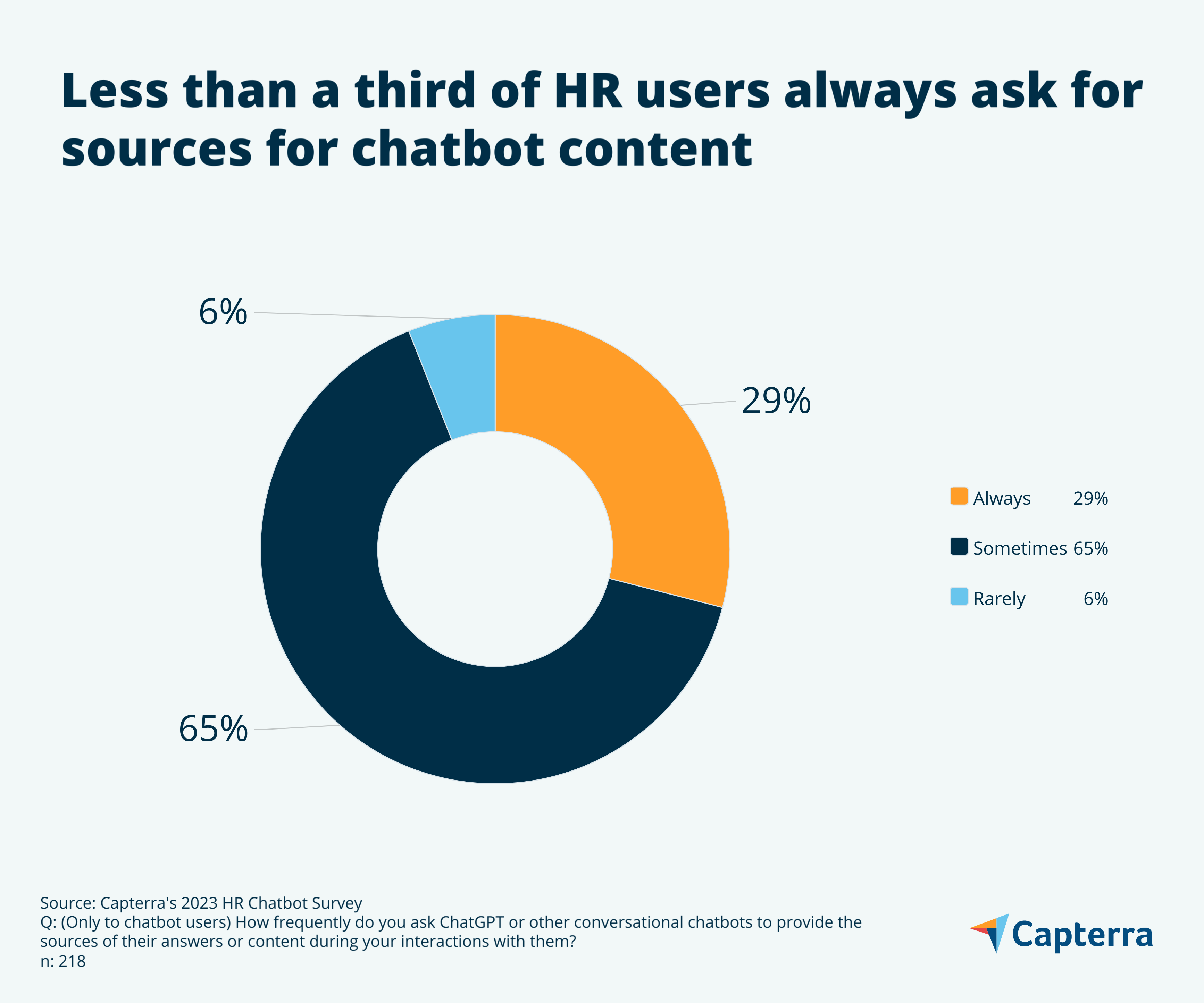

HR departments should rigorously fact-check what these new tools produce so they don’t get burned. Instead, we found that only 29% of HR workers who have used ChatGPT or other generative AI chatbots for work always ask the chatbot to cite sources for the content it provides.

In this report, we’ll dive into our survey results to learn what HR really thinks of this new wave of chatbots and offer best practices for how your department should (and should not) use them to ensure you get great content every time.

/ Key findings

1. ChatGPT sentiment among HR is overwhelmingly positive: 91% of HR workers who have used ChatGPT or other generative AI chatbots for work say the quality of the bot's responses is "good" or "excellent", and 97% of users agree that these chatbots save time so they can focus on other priorities.

2. Using ChatGPT to write your cover letter? HR says go for it: 86% of HR workers who have used ChatGPT or other generative AI chatbots for work say they'd view a job applicant more positively if they submitted a resume or cover letter that was at least partially written by a chatbot.

3. HR users aren’t consistent when asking for source citation: Only 29% of HR workers who have used ChatGPT or other generative AI chatbots for work say they always ask the chatbot to cite the sources for the content it provides.

HR employees say ChatGPT is a legitimate game changer

ChatGPT and other generative AI chatbots like it are trained on mountains of text data to learn the relationships between different words and phrases. They then draw on those learned patterns to craft unique responses to user queries in real time. With minimal input and zero cost, these chatbots can solve math problems, write code, and even tell stories, all in a fairly convincing, “human-like” manner.

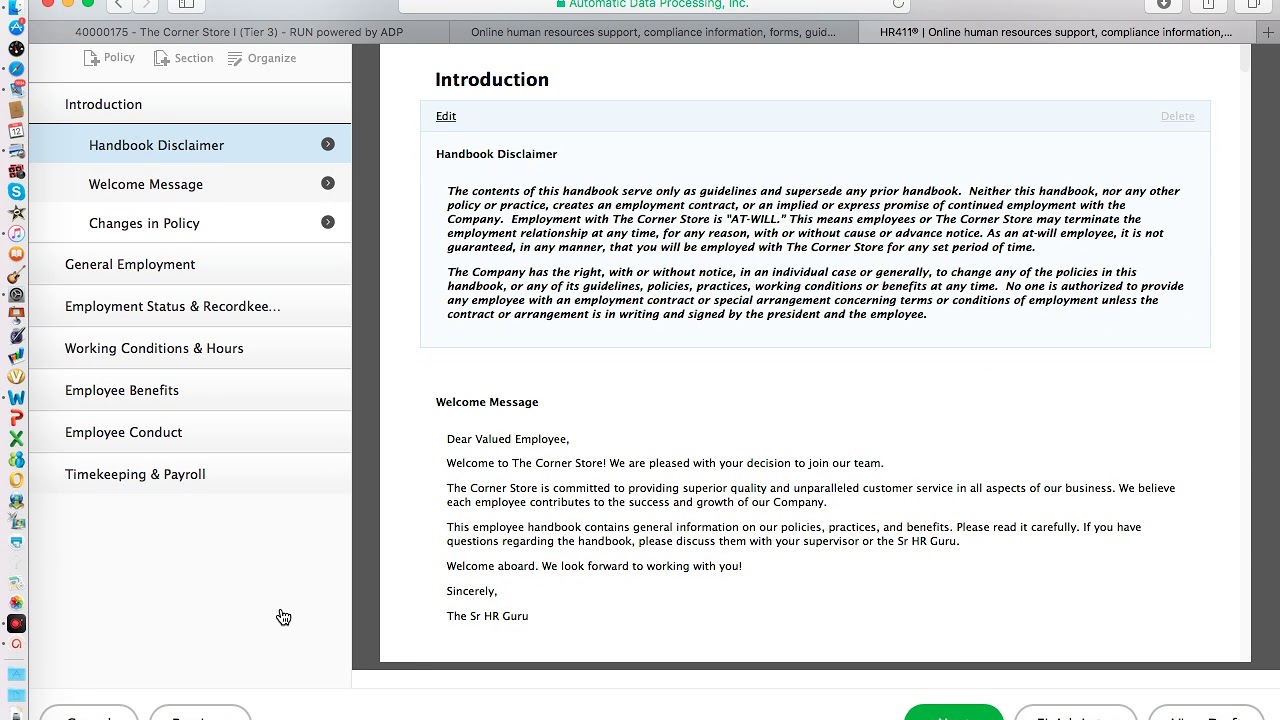

ChatGPT’s description of itself[2]

The benefits of such a tool in the working world were made clear in a recent study by MIT: Test subjects who used ChatGPT for a number of writing and editing tasks completed everything 37% faster than those who didn’t use ChatGPT, and without a significant drop in quality.[3]

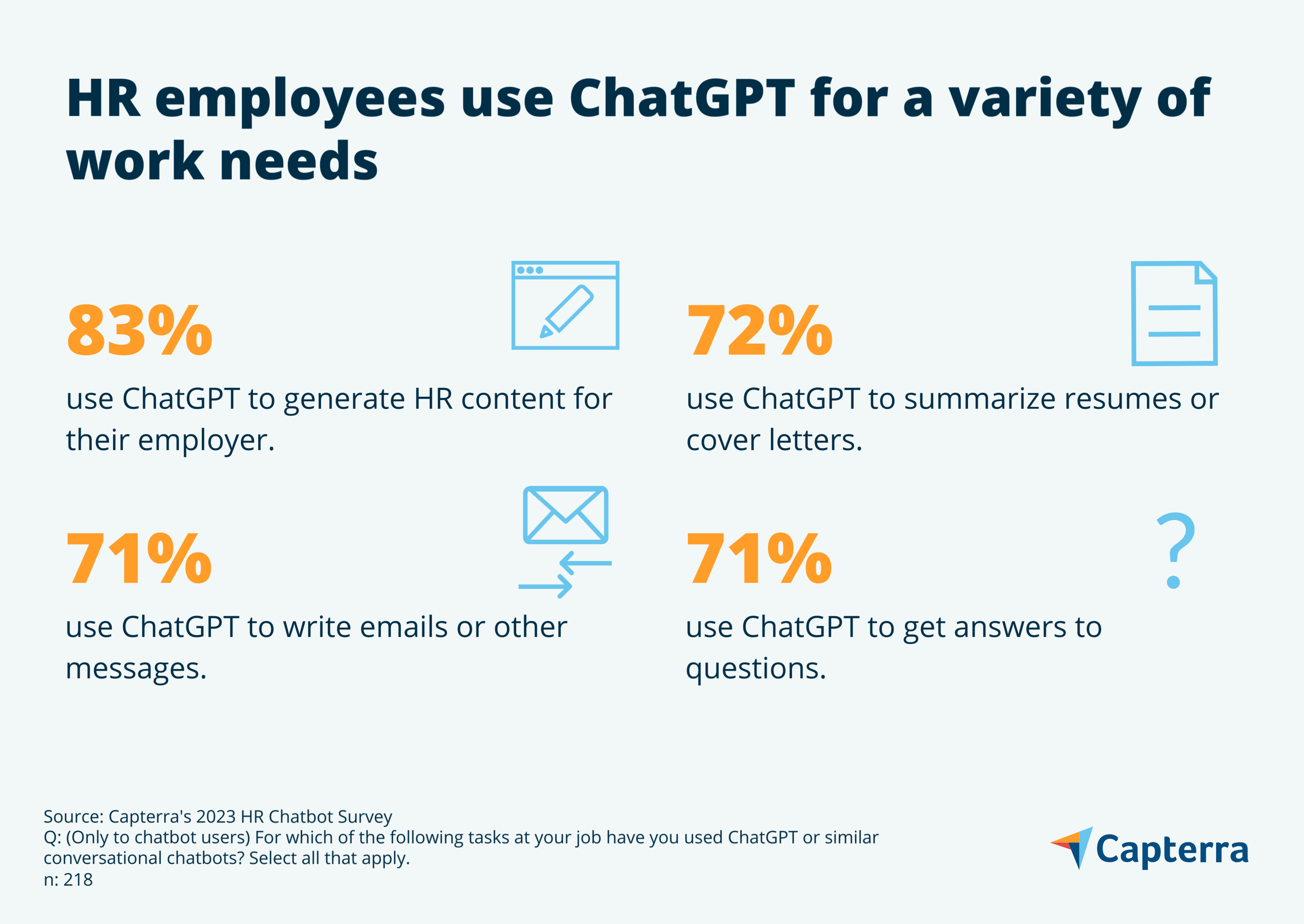

To say HR departments have taken notice would be an understatement. Though these tools haven't been around long, 73% of surveyed HR workers say they've already used ChatGPT or a similar chatbot on the job for a variety of tasks, such as getting detailed answers to questions, writing emails, summarizing applicant resumes, and—most often—generating HR content for their employer.

And so far, reviews offer nearly universal praise. Not only do HR users say these tools generate high-quality content (91% rate the quality of their bot's responses as "good" or "excellent"), but 97% agree that the time saved by using these chatbots to create everything from company policy documentation to job postings lets them focus on other priorities.

In fact, HR users are so fond of these tools that they don't mind if job seekers use them to save time. Asked how their opinion of an applicant would change if they discovered the applicant had used a chatbot to help write their cover letter or resume, 86% said it would positively affect their opinion of the applicant.

If you've used these chatbots already, you’ve probably come away just as impressed. However, it doesn't take much stress testing to realize these tools have a few flaws beneath the surface.

Users don’t always check ChatGPT’s sources (but they really should)

HR employees in our survey love what ChatGPT and similar chatbots can do, so much so that most have become highly trusting of the responses they receive. When asked how often they request sources for the content provided by generative AI chatbots, 29% of surveyed HR users say they always ask, 65% say they sometimes ask, and 6% say they rarely ask.

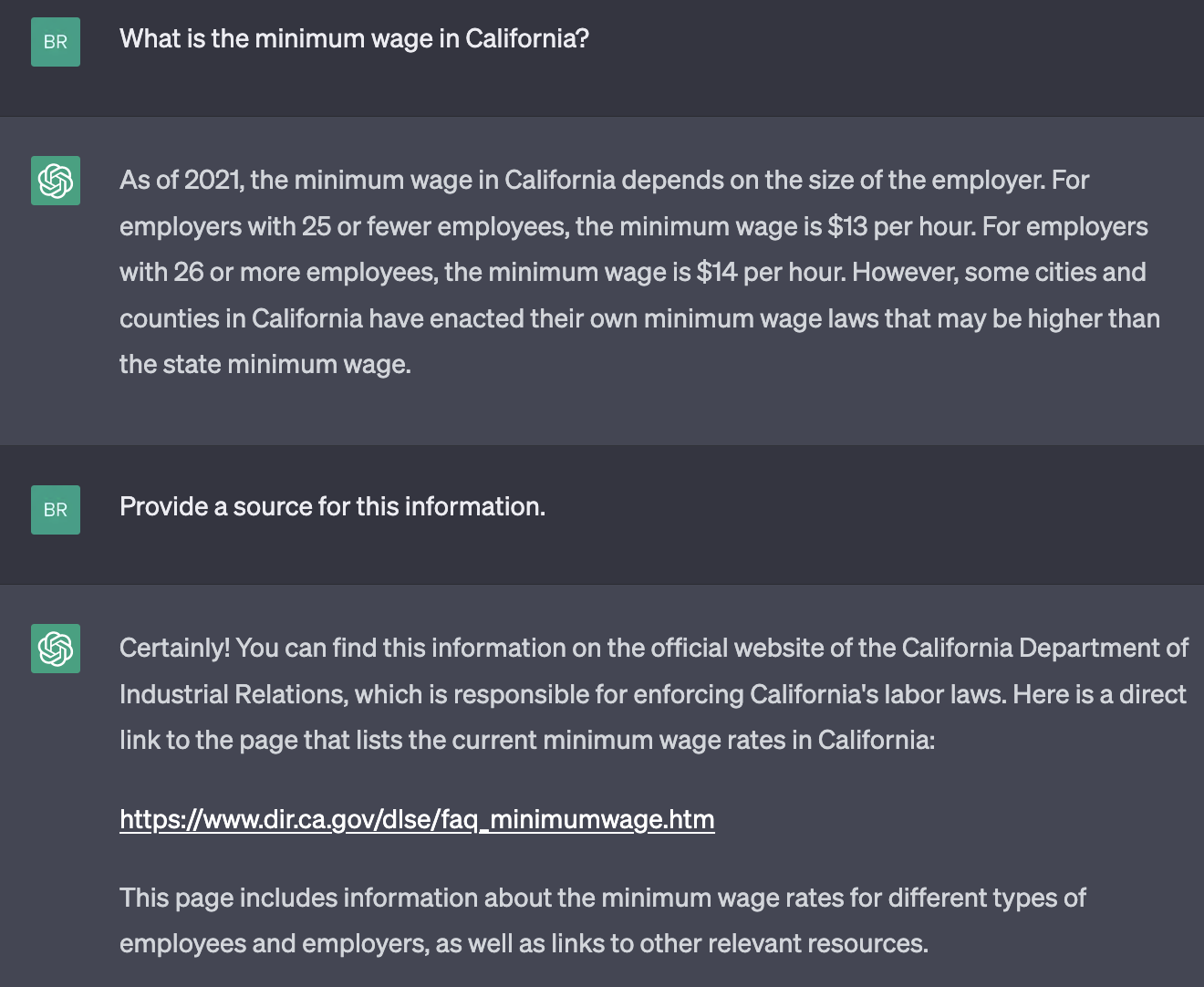

This lack of diligence is alarming when you consider some of ChatGPT's potential pitfalls. In one example, I simply asked ChatGPT what the minimum wage was in California. It said that, as of 2021, the minimum wage was $13 an hour for employers with 25 or fewer employees, and $14 an hour for employers with 26 or more employees.

This answer is “plausible-sounding,” just as OpenAI warned it could be, and an HR user could easily be convinced that it’s correct. However, the text data ChatGPT was trained on ends in 2021, the year it cited in its answer. ChatGPT therefore can’t account for anything that changed after 2021, such as the fact that California’s minimum wage is actually $15.50 an hour for all employers as of 2023.[4]

Unless users are aware of this limitation in ChatGPT’s dataset, the only way they would discover this error is by following up and asking for a source. When I did so, ChatGPT cited California’s Department of Industrial Relations website—which has correct and up-to-date minimum wage information that differs from ChatGPT’s incorrect response.

ChatGPT’s source for its minimum wage answer[5]

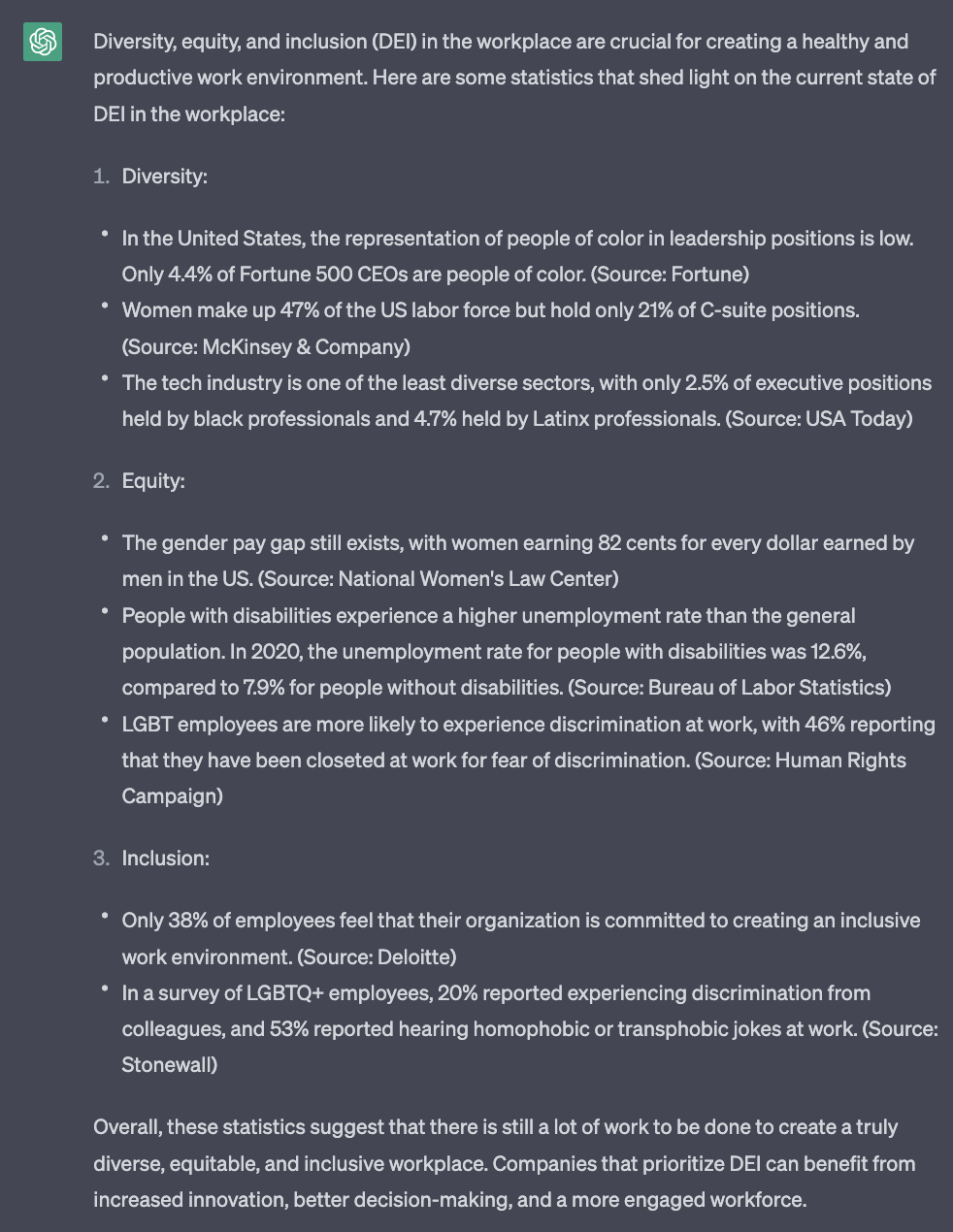

Employment law isn’t the only subject where errors like this can happen. For example, I asked ChatGPT to give me some statistics about diversity, equity, and inclusion (DEI) in the workplace (content that could be useful when creating DEI resources for an organization). In total, the chatbot responded with eight statistics, each with a source, covering how many Fortune 500 CEOs are people of color, the gender pay gap, the percentage of LGBTQ employees who have experienced discrimination at work, and more.

Again, things look pretty good at first glance. When I asked for links to the sources of these stats, however, four links went to web pages that no longer exist, two went to sources with updated stats that don’t match the numbers ChatGPT provided, and one redirected to an unrelated article. Only one of the eight sources was accurate, another consequence of ChatGPT’s dated dataset.

ChatGPT provides stats on DEI[6]

Besides checking sources, HR users also need to be on the lookout for biased language that can creep into chatbot responses, even from seemingly innocent prompts. In one example, a user asked ChatGPT to write performance feedback for different employees. When asked to write feedback for a “bubbly receptionist,” ChatGPT assumed the worker was a woman, while an “unusually strong construction worker” was assumed to be male.[7] A separate study found that job adverts written by ChatGPT were 40% more biased than those written by humans.[8]

If you or someone in your department is using these generative AI tools, the takeaway from all this evidence is clear: the time savings from these chatbots are too good to ignore, but taking the content they provide at face value without further scrutiny or refinement can harm the employee experience and your employer brand.

6 tips for using chatbots to generate HR content

As HR workers generate more and more content with these tools, the risk of departments sharing inaccurate or biased information with employees and job seekers increases dramatically. That said, with a few best practices and some background knowledge, you can keep taking advantage of these cutting-edge tools with minimal risk to the organization.

Beyond always checking the sources for any information a chatbot gives you, here are some additional tips.

1. Check your HR software first

Yes, my first tip for using chatbots is to not use a chatbot at all, but hear me out.

For a lot of boilerplate HR content, especially employee handbook material or performance reviews, HR software systems often already offer templates. If you have software in place, be it a payroll system, a performance management system, or a more comprehensive talent management suite, it’s a good idea to check what templates it has before turning to a chatbot.

This is where the HR specialization of software vendors really helps. Not only is their content more HR-appropriate in style, but vendors are keen to keep these templates up to date, so there’s less chance you’ll share wrong information.

2. Work on your prompt engineering skills

There’s a reason why prompt engineering—which is the discovery of inputs that yield desirable or useful results with AI tools—is one of the hottest career fields right now. Bad prompts with these chatbots will result in bad content. Period.

Luckily, it doesn’t take much finesse to turn a bad response into a workable one. For example, you can ask ChatGPT to write a job description without biased language, and it will do so more often than not. You can also use modifiers to get content in a certain tone (humorous, professional, etc.) or within certain format limits (write three paragraphs, write a bulleted list, etc.).

Keep in mind that ChatGPT works iteratively within a single conversation. If the bot’s first attempt at something isn’t up to snuff, you can ask it to refine what it has already created using different wording or new parameters, without starting over.

3. Consider paying for GPT-4

Right now, the free version of ChatGPT that most people use is based on GPT-3.5. A more advanced model, GPT-4, is available if you subscribe to ChatGPT’s premium offering, ChatGPT Plus.

For $20 a month, not only does GPT-4 come with a few extra features—like the ability to upload images to generate captions or do analysis—but OpenAI says GPT-4 is 40% more likely to produce factual responses than GPT-3.5.[10] If your department plans to use this tool or other tools like it long-term, the added cost for more accurate content is probably worth it.

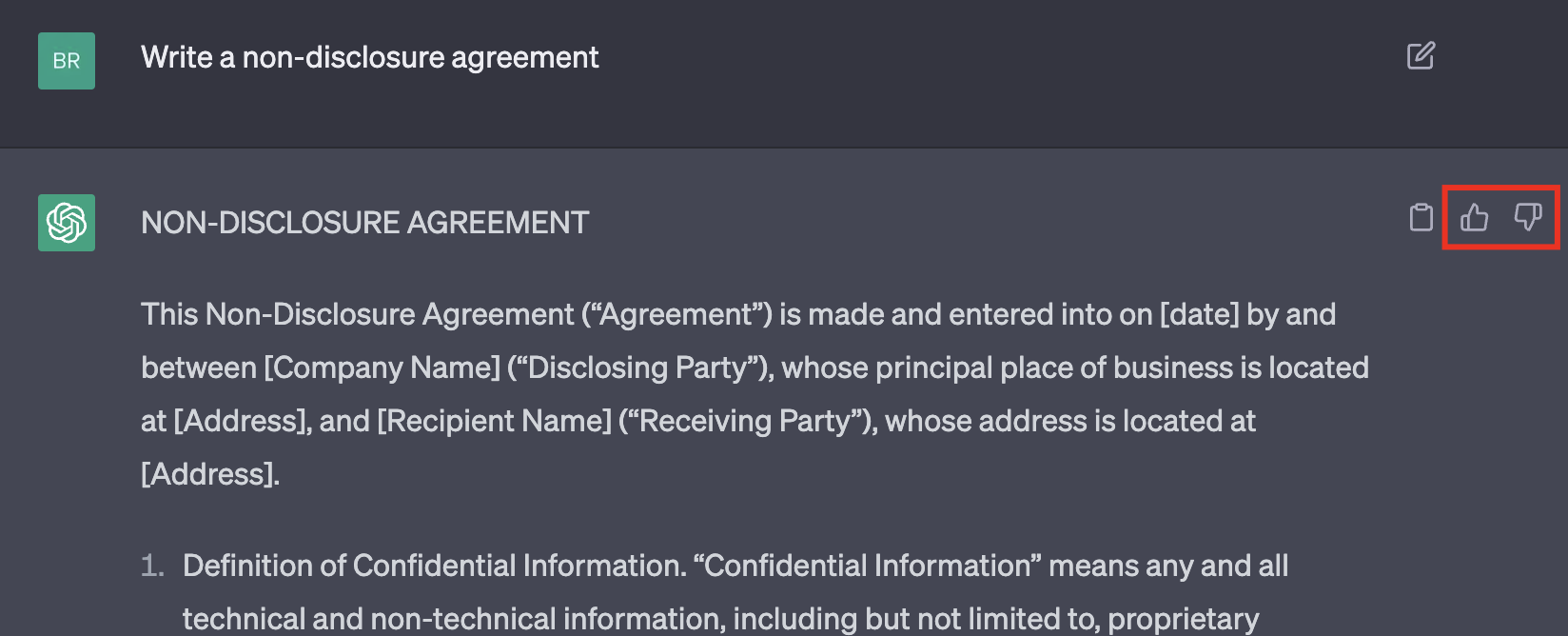

4. Use ChatGPT’s feedback feature

OpenAI is working to make ChatGPT more useful and less biased, but needs your help. If you want ChatGPT to get better over time, use the thumbs-up and thumbs-down buttons next to any content that’s generated to let OpenAI know what you thought of it.

With the thumbs-up button, you can leave a comment explaining why the content was good or useful, while the thumbs-down button lets you flag the response as harmful, untrue, or unhelpful.

ChatGPT’s feedback feature[11]

5. Don’t use chatbots for employment law

For content that’s fairly evergreen, like employee engagement surveys or performance review templates, the risk of using a chatbot like ChatGPT is relatively low. For employment law, however, which changes constantly and must be up to date, the risk goes up considerably.

For now, it’s best to avoid using chatbots for anything related to employment law or compliance altogether.

6. Treat everything as a first draft

Besides the possibility of having inaccurate or biased language, chatbot content by its very nature will lack your company’s unique personality—a vital component to have if you want to entice job seekers or build your company culture.

As awesome as it is that these chatbots can give you the building blocks for company content so quickly, they’re exactly that: building blocks. Whatever content you’re trying to create with these tools, treat it as a first draft, and use subsequent passes to eliminate errors and add that necessary human touch.

Chatbots are here to stay, but HR needs to check their rose-tinted glasses

It’s safe to say generative AI chatbots aren’t going anywhere anytime soon. Microsoft has already folded ChatGPT into its Bing search engine, Google is frantically trying to keep pace by enhancing its own search engine with its chatbot (Bard), and other companies like Amazon and Alibaba are building their own chatbots to improve the customer experience.

When you see these chatbots in action, it’s easy to understand why we can’t put the genie back in the lamp. They represent a generational leap in content production efficiency, but HR departments need to be careful. Move too fast, or take the content these tools generate at face value, and you’ll suffer the consequences.

Note: The application shown in this article is an example to show a feature in context and is not intended as an endorsement or recommendation. It has been obtained from sources believed to be reliable at the time of publication.